5 things to be aware of when using AI for media and communications in the international development sector

AI tools like ChatGPT and image generation software are fast becoming part of modern working life.

In the international development sector there are ongoing discussions about the ethics of using AI to produce imagery and photography for INGO campaigns and the use of tools like ChatGPT to write grant applications and donor reports. One way or another, AI has the potential to revolutionise every corner of the sector.

So what do we need to know when using AI in our media and communications work?

1. AI tools can be used as a starting point for content creation but not the finished product

The first thing to know about the new generation of AI tools like ChatGPT is that they do not “think” like humans do; they predict. Known as “Generative AI”, these tools are trained on vast amounts of data from across the internet. They learn patterns in that data and use them to generate the most likely response to your prompt or question, which is not necessarily the most correct one. This is why the output is highly unlikely to be a perfect finished product.

2. The ethics question

Currently there are many discussions and debates surrounding the ethics and safety of AI. As new innovations impact our daily lives – as we have seen with the case of social media over the last decade – regulation and safeguarding protections are needed.

In the INGO sector, a key ethical issue surrounds the use of image generation tools. AI image generation software is trained on vast datasets of existing imagery, much of it publicly available online, and produces new imagery based on the patterns it has learned, so AI produced images are based on real images. The final product will carry the same biases as the datasets or sources that have been used to create it. Also, it is important to be aware that imagery created by online AI image generation software may not be protected by copyright: in some jurisdictions, such as the United States, only human-authored works can be copyrighted.

3. Issue of bias

As AI tools draw from datasets that are publicly available, such as images and text content on the internet, they carry the biases found in that material and are not always factually correct. AI tools such as ChatGPT can perpetuate and amplify racism, sexism, ableism, and other forms of discrimination, and they are currently largely unregulated. Therefore, it is vital to be aware that any AI model you interact with may reproduce these biases and discriminatory behaviours.

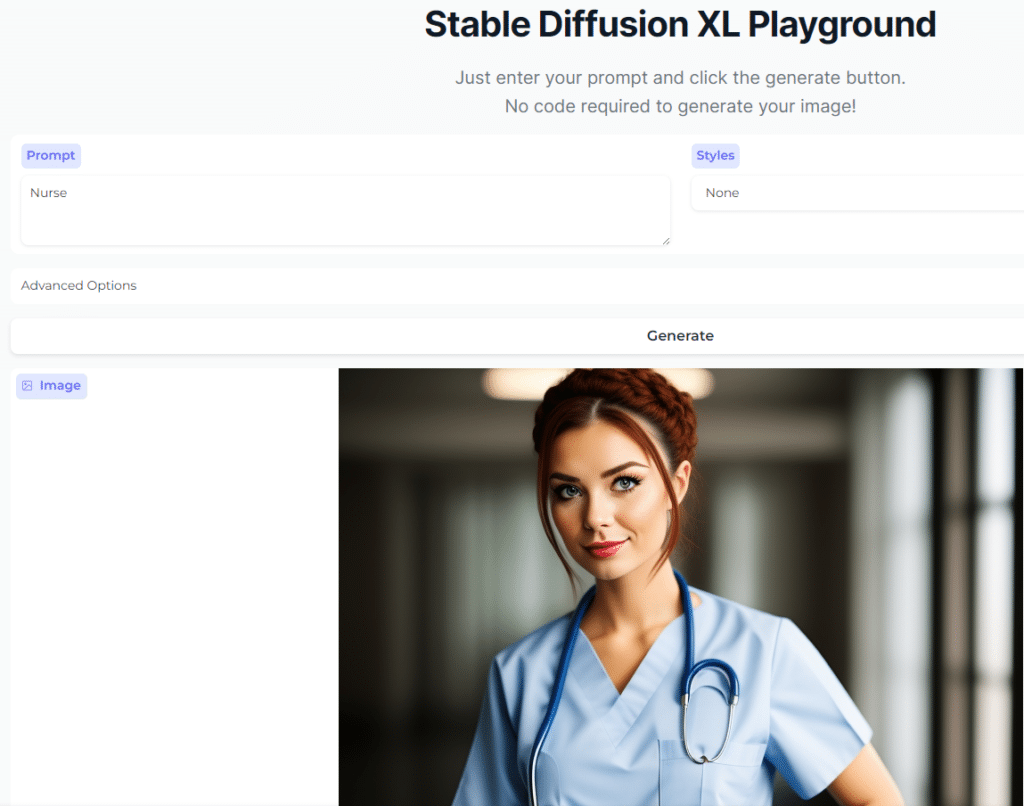

For example, if we were to ask the image generation tool Stable Diffusion to create an image of a “nurse”, it would likely draw on stereotypes and the most common image associated with the description: in this case, a white woman with a stethoscope.

As these images perpetuate biases and are computer generated, it is always better to use real photographs that capture real people and real emotions. If you’re looking to create a more conceptual or abstract image, AI image generation tools might be a useful aid, as you can experiment with what is possible.

Before you publish or publicly use any AI produced content, make sure you and your team always check the accuracy of what is produced.

4. The prompt is important

We get information out of these AI tools by prompting them. The practice of writing effective prompts is known as prompt engineering: structuring text so that it can be interpreted and understood by a generative AI model.

The more specific you are with the prompt and the more background context you provide, the better output you will get.
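To make this concrete, here is a minimal sketch in Python of how a team might assemble a specific, context-rich prompt from a short brief. The `PromptBrief` class, its field names and the `build_prompt` function are hypothetical, invented for illustration; they are not part of ChatGPT or any other tool, and the resulting text would simply be pasted into whichever AI tool you use.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBrief:
    """Hypothetical container for the background context a prompt should carry."""
    task: str                      # what you want the tool to produce
    audience: str = ""             # who the content is for
    output_format: str = ""        # e.g. "three bullet points"
    tone: str = ""                 # e.g. "plain, non-technical English"
    avoid_terms: list[str] = field(default_factory=list)  # words to steer away from


def build_prompt(brief: PromptBrief) -> str:
    """Assemble one prompt string, skipping any context that was left empty."""
    lines = [f"Task: {brief.task}"]
    if brief.audience:
        lines.append(f"Audience: {brief.audience}")
    if brief.output_format:
        lines.append(f"Format: {brief.output_format}")
    if brief.tone:
        lines.append(f"Tone: {brief.tone}")
    if brief.avoid_terms:
        lines.append("Do not use these words or phrases: " + ", ".join(brief.avoid_terms))
    return "\n".join(lines)


if __name__ == "__main__":
    brief = PromptBrief(
        task="Draft a short social media post about a clean water project",
        audience="Existing supporters of an INGO",
        output_format="Two sentences",
        tone="Warm and factual",
    )
    print(build_prompt(brief))
```

The point of the structure is only to prompt you to fill in each piece of context before you ask; an empty field is a reminder of something the tool will otherwise have to guess.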

5. Using ChatGPT to prepare for press interviews

In our work as media professionals in the sector, we are often tasked with briefing our spokespeople on key issues ahead of a media interview. We can use AI tools like ChatGPT to help plan potential questions and reactions by looking at a specific subject matter, interviewer or the content of a publication, as well as the target audience of a media outlet.

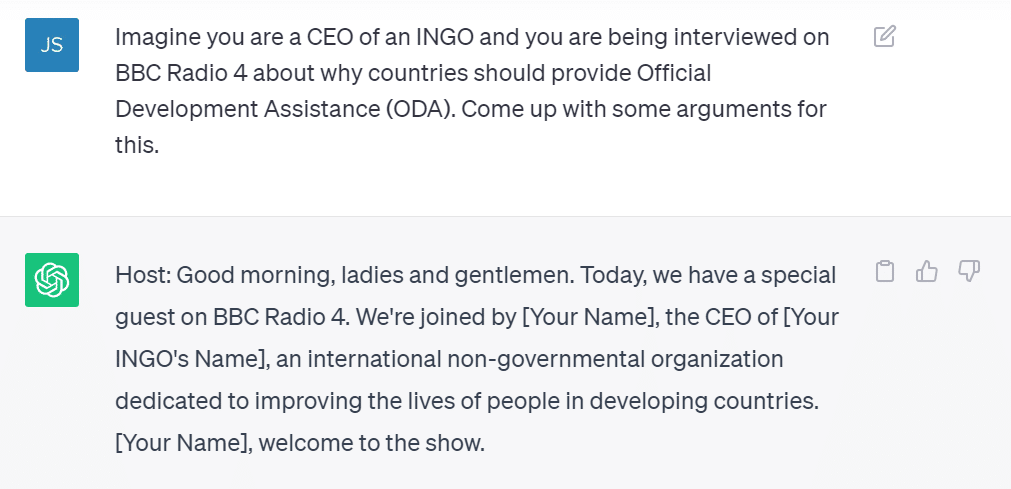

For example, if I were to ask ChatGPT to provide an argument for why countries should provide Official Development Assistance (ODA), I would aim to provide as much context as possible on what I am looking for: what type of interview is it? Who is the target audience? See my prompt and what ChatGPT came up with in the image above.

As you can see, what ChatGPT creates is a script of the imagined interview that draws on some of the key arguments for providing ODA. However, it uses some outdated language such as “developing”, “developed” and “less fortunate”, which demonstrates the limitations of ChatGPT and the lack of nuance in both the tool and the prompt. One way round this could be to ask the tool in the prompt to refrain from using certain words.
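Even with such an instruction, it is worth checking the output yourself. As a rough sketch, assuming you keep your own list of terms to avoid, a few lines of Python can flag them in a draft before a human review. The function name `find_flagged_terms` is hypothetical, and the sample sentence is made up for illustration rather than taken from ChatGPT.

```python
import re


def find_flagged_terms(text: str, flagged: list[str]) -> list[str]:
    """Return the flagged terms that appear in the text as whole words or phrases.

    Matching is case-insensitive. This is a simple word-boundary check,
    so "developed" will not match "developing" and vice versa.
    """
    found = []
    for term in flagged:
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(term)
    return found


if __name__ == "__main__":
    # Terms the article identifies as outdated language.
    terms_to_avoid = ["developing", "developed", "less fortunate"]
    # Invented sample sentence, not real tool output.
    draft = "Aid supports developing countries and helps the less fortunate."
    print(find_flagged_terms(draft, terms_to_avoid))
```

A check like this only catches the exact words on your list; it does not replace reading the text for tone and framing.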

If you are preparing your spokesperson for an interview with a prominent journalist, such as LBC host Andrew Marr or BBC Radio 4’s Nick Robinson, you can also mention their name in the prompt to see examples of questions they might ask in their style. Since these people are well known, the model has likely seen enough public information about them to produce questions in their tone of voice.

As we have seen above, AI programmes like ChatGPT are useful for supporting our messaging creation, or predicting the type of questions journalists might ask in an interview. But always remember that AI tools are just that: tools, not solutions. We cannot rely on them to produce all of our content or final products, but they can be used as a springboard to get you started.
